Founded in 2016 and headquartered in New York,

Hugging Face has

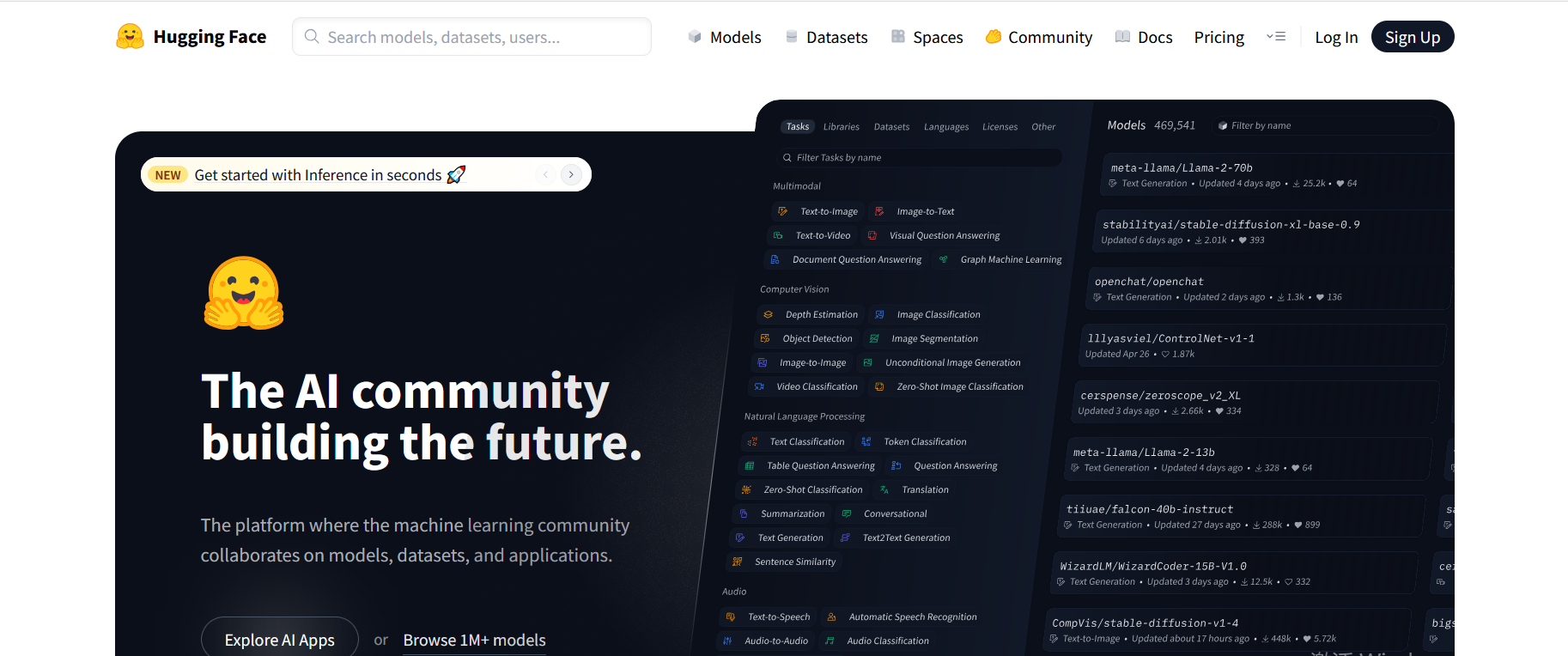

evolved from a chatbot startup to the world's leading open platform for

machine learning (ML).

Official Hugging Face website: https://huggingface.co

Its core offerings include:

Model Hub: Hosts 500,000+ pre-trained models (as of 2025) spanning NLP, computer vision, and multimodal AI

Datasets Library: Curates 50,000+ datasets with version control

Inference API: Enables cloud-based model deployment with pay-as-you-go pricing

Spaces: Lets developers build and share ML demo apps (150,000+ hosted as of 2025)
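As a rough illustration of how the Model Hub is usually reached from Python, the sketch below builds the direct download URL for a file in a model repository and fetches a model's configuration. It assumes the `huggingface_hub` package is installed and uses the public `google-bert/bert-base-uncased` repository only as an example; `hub_file_url` and `download_config` are hypothetical helper names, not library functions.

```python
import json

HUB_ENDPOINT = "https://huggingface.co"


def hub_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct download URL for one file in a model repository.

    Repositories are version controlled, so `revision` can be a branch,
    a tag, or a commit hash.
    """
    return f"{HUB_ENDPOINT}/{repo_id}/resolve/{revision}/{filename}"


def download_config(repo_id: str, revision: str = "main") -> dict:
    """Download a model's config.json into the local cache and parse it.

    Needs network access and `pip install huggingface_hub`.
    """
    # Imported lazily so the URL helper above works without the package.
    from huggingface_hub import hf_hub_download

    path = hf_hub_download(repo_id=repo_id, filename="config.json", revision=revision)
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

Calling `download_config("google-bert/bert-base-uncased")` should return the model's configuration as a dictionary. Pinning `revision` to a commit hash is one way to keep downloads reproducible.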

2. Technical Architecture

2.1 Transformers Library

The open-source transformers library (50M+ downloads/month) provides:

Unified APIs for 100+ architectures (BERT, GPT, T5, etc.); diffusion models such as Stable Diffusion are covered by the separate diffusers library

Support for PyTorch, TensorFlow, and JAX frameworks

Tokenizers optimized for 200+ languages
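To make the "unified API" point concrete, here is a minimal sketch that assumes `transformers` and a backend such as PyTorch are installed. The checkpoint name is just one public sentiment model chosen for illustration, and `classify`, `tokenize`, and `confident_labels` are hypothetical helper names.

```python
DEFAULT_CHECKPOINT = "distilbert-base-uncased-finetuned-sst-2-english"


def classify(texts, checkpoint=DEFAULT_CHECKPOINT):
    """Run sentiment classification through the high-level pipeline API.

    Swapping `checkpoint` for another compatible model leaves this code
    unchanged, which is what "unified API" means in practice.
    """
    from transformers import pipeline  # lazy import: needs transformers installed

    classifier = pipeline("sentiment-analysis", model=checkpoint)
    return classifier(texts)  # list of {"label": ..., "score": ...} dicts


def tokenize(texts, checkpoint=DEFAULT_CHECKPOINT):
    """Load the tokenizer that matches a checkpoint and encode a batch."""
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    return tokenizer(texts, padding=True, truncation=True)


def confident_labels(predictions, threshold=0.9):
    """Keep only the labels whose score reaches the threshold."""
    return [p["label"] for p in predictions if p["score"] >= threshold]
```

A typical call would be `confident_labels(classify(["I love this library."]))`. The first run downloads the checkpoint from the Hub, so it needs network access.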

2.2 Hardware Optimization

Accelerate: Simplifies distributed training across GPUs/TPUs

Optimum: Enhances performance for Intel, Habana, and NVIDIA hardware

ONNX Runtime (integrated through Optimum): Reduces inference latency by a reported 40-60% in production deployments; actual gains vary with model and hardware
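Latency figures like the one quoted for ONNX Runtime depend heavily on the model, batch size, and hardware, so it is worth measuring on your own workload. The sketch below is one possible way to do that, assuming `optimum[onnxruntime]`, `transformers`, and `torch` are installed. The helper names are hypothetical, and the result is whatever your machine produces, not a guaranteed speedup.

```python
import statistics
import time


def median_latency_ms(predict, sample, warmup=3, runs=20):
    """Median wall-clock latency of predict(sample), in milliseconds."""
    for _ in range(warmup):  # warm-up calls are not timed
        predict(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def relative_reduction(baseline_ms, optimized_ms):
    """Fraction of latency removed; 0.5 means the optimized path takes half the time."""
    return (baseline_ms - optimized_ms) / baseline_ms


def compare_backends(checkpoint, text):
    """Benchmark a PyTorch pipeline against an ONNX Runtime export of the same model."""
    # Lazy imports: the two helpers above work without these packages.
    from optimum.onnxruntime import ORTModelForSequenceClassification
    from transformers import AutoTokenizer, pipeline

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    torch_pipe = pipeline("text-classification", model=checkpoint, tokenizer=tokenizer)

    # export=True converts the checkpoint to ONNX when it is loaded.
    ort_model = ORTModelForSequenceClassification.from_pretrained(checkpoint, export=True)
    ort_pipe = pipeline("text-classification", model=ort_model, tokenizer=tokenizer)

    return relative_reduction(
        median_latency_ms(torch_pipe, text),
        median_latency_ms(ort_pipe, text),
    )
```

A return value of 0.4 from `compare_backends` would correspond to a 40% latency reduction. The median is used rather than the mean so that a few slow outlier runs do not dominate the result.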

3. Community Impact

3.1 Open-Source Ecosystem

Collaborative Model Development: 80% of state-of-the-art (SOTA) NLP models are published via Hugging Face

Dataset Cards: Standardized documentation improves reproducibility

Model Cards: Ethical usage guidelines for sensitive applications
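Model and dataset cards are stored as a README file with a metadata block at the top, delimited by `---` lines, followed by free-form Markdown. The sketch below shows that layout with a small hand-written sample. The parser is a deliberate simplification that reads only flat `key: value` pairs, and the sample card and helper names are made up for illustration.

```python
SAMPLE_CARD = """---
license: apache-2.0
language: en
pipeline_tag: text-classification
---
# Example model

Intended use, limitations, and bias notes go here.
"""


def split_card(readme_text):
    """Split a card into (metadata_block, markdown_body)."""
    lines = readme_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return "", readme_text  # no metadata block at all
    for i in range(1, len(lines)):
        if lines[i].strip() == "---":
            return "\n".join(lines[1:i]), "\n".join(lines[i + 1:])
    return "", readme_text  # opening fence never closed: treat as plain body


def flat_metadata(metadata_block):
    """Parse top-level `key: value` pairs only.

    Real cards can contain lists and nested fields, which need a proper
    YAML parser; those lines are skipped here.
    """
    result = {}
    for line in metadata_block.splitlines():
        if ":" in line and not line.startswith((" ", "-", "#")):
            key, _, value = line.partition(":")
            result[key.strip()] = value.strip()
    return result
```

For real repositories, the `huggingface_hub` package provides a `ModelCard` class that loads and parses cards directly, which is preferable to hand-rolled parsing like this.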

3.2 Enterprise Adoption

Used by 50% of Fortune 500 tech companies for:

Private model hosting with SSO/SAML integration

Custom CI/CD pipelines for MLOps

Security features like model signing and audit logs

4. Future Directions

AI Governance Tools: Bias detection and regulatory compliance modules

Low-Code Interfaces: Drag-and-drop fine-tuning for non-engineers

Physical World Integration: Robotics transformers library (beta)