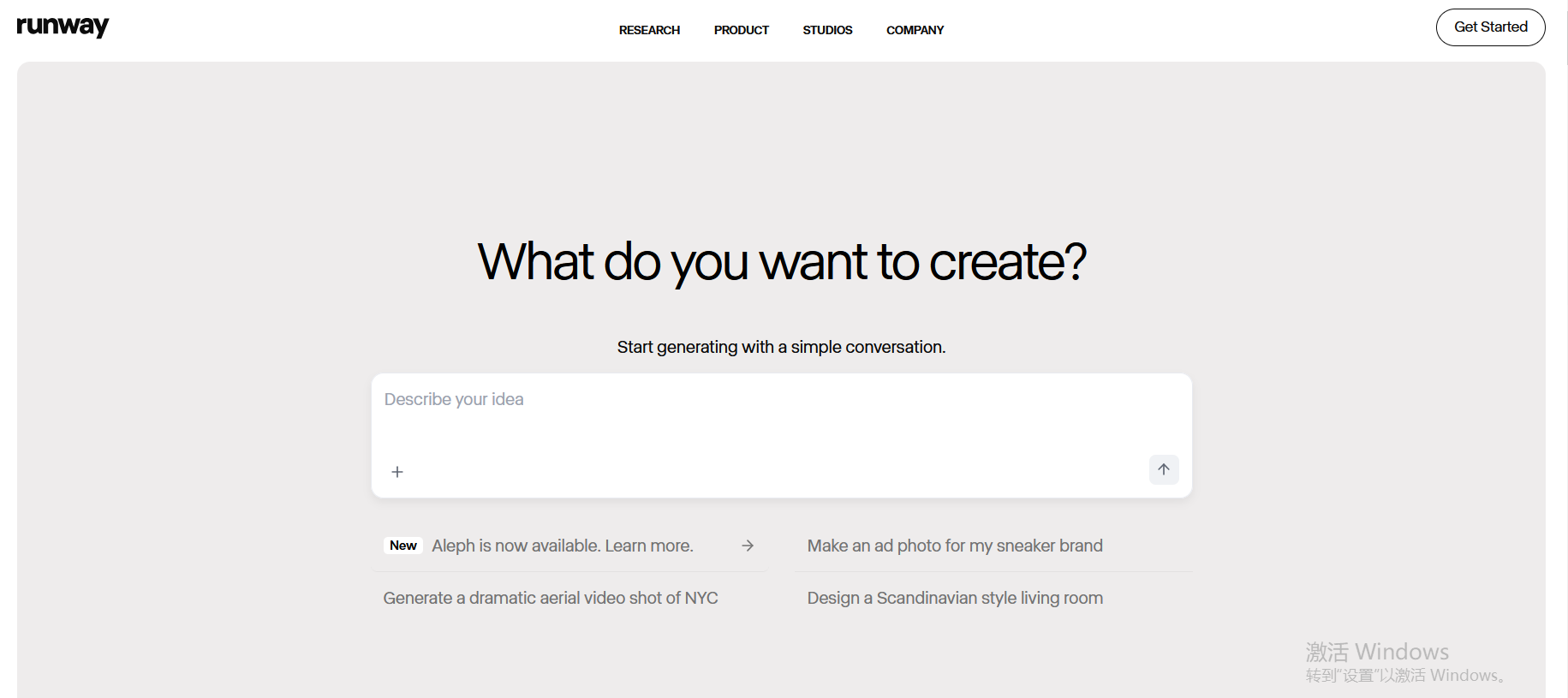

Runway ML is a cloud-based platform that democratizes artificial intelligence for creative professionals, offering 100+ AI models for video/image generation, editing, and multimodal synthesis. Founded in 2018 by artists and engineers, it bridges the gap between cutting-edge ML research and practical creative workflows.

Runway ML official website: https://runwayml.com

Technical Architecture

• Hosts curated neural networks including Stable Diffusion (v3.0), GPT-4o Vision, and proprietary models like Gen-3 for video synthesis

• Unified API architecture allows seamless switching between diffusion models, GANs, and transformer-based tools

• On-demand GPU scaling via partnerships with AWS and Google Cloud
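To make the idea of a unified API more concrete, here is a minimal sketch of one way a single calling convention could sit in front of different model families. It is not Runway's actual API: `ModelRegistry`, `fake_diffusion`, and `fake_gan` are hypothetical names, and the backends are stand-ins that only return strings.

```python
from typing import Callable, Dict

# Hypothetical names throughout: none of this is Runway's real API.
# Every backend shares one signature: prompt in, result out.
ModelFn = Callable[[str], str]


class ModelRegistry:
    """Maps a model name to a callable with a shared signature."""

    def __init__(self) -> None:
        self._models: Dict[str, ModelFn] = {}

    def register(self, name: str, fn: ModelFn) -> None:
        self._models[name] = fn

    def run(self, name: str, prompt: str) -> str:
        if name not in self._models:
            raise KeyError(f"unknown model: {name}")
        return self._models[name](prompt)


# Stand-in backends; real ones would invoke a diffusion model, a GAN, etc.
def fake_diffusion(prompt: str) -> str:
    return f"diffusion-image({prompt})"


def fake_gan(prompt: str) -> str:
    return f"gan-image({prompt})"


registry = ModelRegistry()
registry.register("diffusion", fake_diffusion)
registry.register("gan", fake_gan)

# Switching models changes only the name, not the calling code.
for model_name in ("diffusion", "gan"):
    print(registry.run(model_name, "a red kite at dusk"))
```

The point of the design is that the caller never branches on model type; adding a transformer-based tool would mean one more `register` call rather than a new code path.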

Real-Time Collaboration Engine

• Version-controlled project system with Adobe Creative Cloud integration

• Frame-accurate video editing AI that preserves temporal coherence

• Multi-user editing with conflict resolution algorithms
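The text does not say which conflict resolution algorithm is used, so the following is only an illustrative sketch of one common approach, last-writer-wins per field. The `Edit` type, the field names, and the logical `timestamp` are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Edit:
    field: str      # which project property was changed (hypothetical naming)
    value: str      # the new value
    timestamp: int  # logical clock value assigned by the editing client
    user: str       # used only to break timestamp ties deterministically


def merge(edits: list[Edit]) -> dict[str, str]:
    """Per field, keep the edit with the highest (timestamp, user) pair."""
    winners: dict[str, Edit] = {}
    for edit in edits:
        current = winners.get(edit.field)
        if current is None or (edit.timestamp, edit.user) > (
            current.timestamp,
            current.user,
        ):
            winners[edit.field] = edit
    return {name: e.value for name, e in winners.items()}


edits = [
    Edit("clip_1.color", "warm", 3, "ana"),
    Edit("clip_1.color", "cool", 5, "ben"),   # later edit to the same field
    Edit("clip_2.speed", "0.5x", 4, "ana"),   # no competing edit
]
print(merge(edits))
```

Because the winner depends only on the `(timestamp, user)` pair, every collaborator reaches the same result regardless of the order in which edits arrive. The trade-off is that the losing edit is silently discarded, which is why richer schemes (operational transforms, CRDTs) exist.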

Core Feature Breakdown

AI Video Tools

• Motion Brush: Animate static images with vector paths

• Inpainting: Object removal/replacement at 4K resolution

• Lip Sync: Audio-driven facial animation with 95% accuracy

Image Synthesis

• Style Transfer: 800+ preset artistic filters

• Depth Control: 3D scene reconstruction from 2D images

• AI Upscaling: 8x resolution enhancement with detail hallucination
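As a quick worked example of what 8x upscaling implies, the sketch below computes output dimensions and pixel counts. It assumes the factor applies to each side of the image, which the text does not state; `upscaled_size` is a hypothetical helper, not a platform function.

```python
def upscaled_size(width: int, height: int, factor: int = 8) -> tuple[int, int]:
    """Output dimensions, assuming the factor applies to each side."""
    return width * factor, height * factor


src_w, src_h = 512, 512
out_w, out_h = upscaled_size(src_w, src_h)

# Ratio of output pixels to input pixels: factor squared.
pixel_ratio = (out_w * out_h) // (src_w * src_h)
print(out_w, out_h, pixel_ratio)
```

Under that assumption a 512x512 image becomes 4096x4096, which is 64 times as many pixels. Most of the output therefore has no directly corresponding source pixel, and that is the gap "detail hallucination" has to fill.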

Enterprise Solutions

• Frame.io integration for studio pipelines

• Ethical AI watermarking compliant with C2PA standards

• Custom model training via no-code interface

Industry Impact (2025 Data)

• Media Production: Used in 60% of Oscar-nominated VFX films this year

• Education: Adopted by 1,200+ universities for digital arts curricula

• Startup Ecosystem: 3,500+ indie game studios leverage its asset generation

"Runway represents the Photoshop moment for generative AI" — Wired, 2024

The platform's upcoming "Creative Agent" (beta Q4 2025) promises autonomous storyboarding by interpreting natural language prompts, potentially disrupting traditional pre-production workflows.